Korzh R.A.

Krivoy Rog National University, Ukraine

TOWARD AUTOMATIC MUSIC TRANSCRIPTION: PROBLEMS AND SOLUTION

Introduction

At present, a great number of developments are dedicated to the problems of automatic music transcription. Work in this field began in 1975, when James Moorer designed the first system capable of transcribing duets [1].

The general approach consists of three steps. First, the audio signal is decomposed into its harmonic components using one of several mathematical methods [2]. Second, local spectral peaks are grouped into harmonic sets according to various criteria. Finally, the resulting information is used to estimate the timbre of the musical instruments, again by different approaches [3].
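As an illustration of the second step, the following sketch groups spectral peaks into harmonic sets by testing whether each peak lies close to an integer multiple of a candidate fundamental. It is a minimal sketch under stated assumptions: the function name `group_harmonics`, the greedy lowest-peak-first rule and the 3% relative tolerance are illustrative choices, not the criteria used in [2] or [3].

```python
def group_harmonics(peaks_hz, tolerance=0.03, max_harmonic=10):
    """Greedily group spectral peak frequencies (Hz) into harmonic sets.

    Illustrative rule (an assumption): the lowest ungrouped peak is taken as
    a fundamental f0, and every other ungrouped peak lying within `tolerance`
    (relative) of k * f0, for k = 2..max_harmonic, joins its set.
    """
    remaining = sorted(peaks_hz)
    groups = []
    while remaining:
        f0 = remaining.pop(0)
        members, rest = [f0], []
        for f in remaining:
            k = round(f / f0)  # nearest harmonic number
            if 2 <= k <= max_harmonic and abs(f - k * f0) <= tolerance * k * f0:
                members.append(f)
            else:
                rest.append(f)
        groups.append(members)
        remaining = rest
    return groups


# Peaks of A3 (220 Hz) and E-flat 4 (about 311 Hz) mixed together
peaks = [220.0, 311.13, 440.0, 622.25, 660.0, 880.0, 933.4]
print(group_harmonics(peaks))
# [[220.0, 440.0, 660.0, 880.0], [311.13, 622.25, 933.4]]
```

For this pair of notes the greedy rule recovers the two expected sets. Notes whose partials coincide, such as a perfect fifth, would be merged into one set by the same rule, which is the partial-overlap problem discussed in the critical analysis.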

A complete transcription would require that the pitch, timing and instrument of all the sound events be resolved. As this can be very hard or even theoretically impossible in some cases, the goal is usually redefined as being either to notate as many of the constituent sounds as possible (complete transcription) or to transcribe only some well-defined part of the music signal, for example the dominant melody or the most prominent drum sounds (partial transcription) [1].

Critical analysis

According to [4], most automatic systems were designed for very specific classes of musical pieces. This means that they cannot convert real-world music into an object-oriented format. Let us consider these restrictions in more detail.

Music signals are characteristically non-stationary,

meaning that the sound evolves over time but over sufficiently short periods of

time are often considered to be "quasi-stationary" [5]. This property

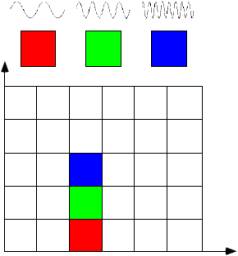

of local stationarity suggests the discrete short-time Fourier transform (fig.

1a) as a signal representation and widely used by the researchers [1]. However,

this approach has the following disadvantages:

- the STFT has the same resolution in all frequency bands, whereas increased resolution is needed in the high-frequency bands;
- a sound object can be located at the edge of an analysis window, yet it must be identified as reliably as an object concentrated at the window centre.
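The fixed tiling of the STFT can be seen in a direct implementation. The sketch below computes STFT magnitudes with a naive DFT and a periodic Hann window (both illustrative choices; a practical system would use an FFT library). Because every frame uses the same window length, the bin spacing fs / win_len and the time span of a frame are identical in every frequency band.

```python
import cmath
import math


def stft_magnitude(x, win_len, hop):
    """Naive STFT magnitudes with a periodic Hann window (O(N^2) DFT per frame).

    Every frame uses the same window, so the frequency spacing
    (fs / win_len) and the time span of a frame (win_len samples) are
    identical in all frequency bands.
    """
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / win_len) for n in range(win_len)]
    frames = []
    for start in range(0, len(x) - win_len + 1, hop):
        seg = [x[start + n] * window[n] for n in range(win_len)]
        spectrum = [
            abs(sum(seg[n] * cmath.exp(-2j * math.pi * k * n / win_len) for n in range(win_len)))
            for k in range(win_len // 2 + 1)  # bins 0 .. Nyquist
        ]
        frames.append(spectrum)
    return frames


fs = 8000
x = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(256)]
frames = stft_magnitude(x, win_len=64, hop=32)
print(len(frames), fs / 64)  # 7 frames, 125.0 Hz per bin in every band
print([max(range(len(f)), key=f.__getitem__) for f in frames])  # bin 8 (1000 Hz) in each frame
```

With a 64-sample window at 8 kHz the spacing is 125 Hz for low and high notes alike; resolving two adjacent low notes would need a longer window, which in turn blurs timing in all bands.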

Figure 1 – Time-frequency resolution (axes: time, frequency): a) Fourier transform, b) wavelet transform

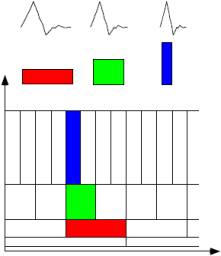

Both problems can be solved by using the wavelet transform (fig. 1b). Its basis functions are localized in both the time and the frequency domain and act as window functions. By contrast, the basis functions of the Fourier transform are sinusoids, which have ideal frequency localization but no time localization at all.
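The multiresolution tiling of fig. 1b can be illustrated with the simplest orthonormal wavelet, the Haar wavelet. This choice is an assumption made only for illustration, since the paper does not name the wavelet family it uses. Each level halves the number of coefficients, so the first (highest-frequency) level has the finest time resolution, and an isolated click is localized to a single coefficient instead of being spread over a whole window.

```python
import math


def haar_dwt(x, levels):
    """Multi-level Haar discrete wavelet transform.

    Returns (details, approx): details[j] holds the detail coefficients of
    level j + 1. Each level halves the coefficient count, trading time
    resolution for a narrower (lower) frequency band.
    len(x) must be divisible by 2**levels.
    """
    if len(x) % (2 ** levels):
        raise ValueError("len(x) must be divisible by 2**levels")
    s = 1 / math.sqrt(2)  # orthonormal scaling, so signal energy is preserved
    approx = list(x)
    details = []
    for _ in range(levels):
        pairs = range(0, len(approx), 2)
        details.append([s * (approx[i] - approx[i + 1]) for i in pairs])  # high-pass half
        approx = [s * (approx[i] + approx[i + 1]) for i in pairs]  # low-pass half
    return details, approx


x = [0.0] * 16
x[5] = 1.0  # an isolated click
details, approx = haar_dwt(x, levels=3)
print([len(d) for d in details], len(approx))  # [8, 4, 2] 2
print([i for i, c in enumerate(details[0]) if c != 0])  # [2]: a single finest-level coefficient
```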

Let us now consider the fundamental problems of complex music analysis:

1. overlapping partials strongly affect the accuracy of the result, which may show up either as merged sounds or as wrongly separated simultaneously sounding notes;
2. overlapping timbres, especially between different instrument parts;
3. spectral resolution: the higher the frequency, the higher the resolution required for a detailed spectral analysis of the sound wave;
4. meter estimation, especially for input music signals with an amplitude-aligned representation.
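Problem 1 can be quantified with a small worked example. Assuming ideal harmonic partials and equal temperament (A4 = 440 Hz), the sketch below lists which partials of two notes fall within 0.5% of each other; the tolerance is an arbitrary illustrative value.

```python
def note_freq(midi_note, a4_hz=440.0):
    """Equal-tempered fundamental (Hz) of a MIDI note number; A4 = note 69."""
    return a4_hz * 2 ** ((midi_note - 69) / 12)


def overlapping_partials(f_a, f_b, n_partials=10, rel_tol=0.005):
    """Pairs (k, m) where partial k of note a and partial m of note b nearly coincide.

    Ideal harmonic partials (k * f0) are assumed; real instruments may be
    slightly inharmonic.
    """
    pairs = []
    for k in range(1, n_partials + 1):
        for m in range(1, n_partials + 1):
            fa, fb = k * f_a, m * f_b
            if abs(fa - fb) <= rel_tol * min(fa, fb):
                pairs.append((k, m))
    return pairs


c4, g4 = note_freq(60), note_freq(67)
print(overlapping_partials(c4, g4))  # [(3, 2), (6, 4), (9, 6)]
```

For C4 and G4 (a perfect fifth), every third partial of C4 practically coincides with a partial of G4 (for example 784.9 Hz vs. 784.0 Hz), so a method based on spectral peaks alone cannot tell which note produced these peaks.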

Taking these aspects into consideration, the following restrictions are widespread in today's systems:

1. musical polyphony degree (a limit on the number of simultaneously sounding objects in a piece of music);
2. object polyphony degree (a limit on the number of simultaneously sounding objects within one musical instrument);
3. instrumental polyphony degree (a limit on the number of simultaneously sounding musical instruments in a piece of music).
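The three restrictions can be made precise by computing each degree from a list of notes. The representation below, `(instrument, onset_s, offset_s)` tuples with half-open time intervals, is a hypothetical format chosen for illustration; the paper does not define one.

```python
from collections import defaultdict


def max_overlap(intervals):
    """Maximum number of half-open [onset, offset) intervals active at once."""
    events = []
    for onset, offset in intervals:
        events.append((onset, 1))
        events.append((offset, -1))
    events.sort()  # at equal times -1 sorts first, so touching notes do not overlap
    active = best = 0
    for _, delta in events:
        active += delta
        best = max(best, active)
    return best


def polyphony_degrees(notes):
    """notes: list of (instrument, onset_s, offset_s) tuples (hypothetical format)."""
    musical = max_overlap([(on, off) for _, on, off in notes])
    by_instr = defaultdict(list)
    for instr, on, off in notes:
        by_instr[instr].append((on, off))
    per_object = {instr: max_overlap(iv) for instr, iv in by_instr.items()}
    # An instrument sounds while any of its notes is active: merge its notes
    # into activity spans, then count simultaneously active instruments.
    spans = []
    for iv in by_instr.values():
        merged = []
        for on, off in sorted(iv):
            if merged and on < merged[-1][1]:
                merged[-1][1] = max(merged[-1][1], off)
            else:
                merged.append([on, off])
        spans.extend((on, off) for on, off in merged)
    instrumental = max_overlap(spans)
    return musical, per_object, instrumental


notes = [("piano", 0.0, 2.0), ("piano", 0.0, 2.0), ("piano", 1.0, 3.0),
         ("violin", 0.5, 1.5), ("violin", 2.5, 4.0), ("flute", 2.8, 4.5)]
print(polyphony_degrees(notes))
```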

Description of the proposed method

Figure 2 shows the algorithm of a new approach whose aim is to achieve the highest possible quality in converting sound information into an object model by adapting to the specific sound data.

Before an arranger transcribes a piece of music by ear, he or she first listens to it and determines its instrumental polyphony degree, that is, compiles a list of the instruments used in the piece. The arranger then transcribes the part of each instrument separately [6].

The proposed approach follows the same idea as the arranger's workflow. To show this, let us describe each block of the algorithm (fig. 2) in more detail.

The input data block receives the samples of the music signal $s_i$ ($i = 1, 2, \ldots, n$). If the input data are empty or contain only part of the whole audio signal, a corresponding message is shown (block 3), and the process repeats until it is aborted or the system receives correct data. Otherwise, the process goes to block 4.
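The control flow of blocks 1 to 3 is a simple retry loop. In the sketch below, `read_chunk` is a hypothetical input callable, `max_attempts` stands in for the user's abort, and an input is assumed to be complete when it has exactly `expected_len` samples (for example, a length taken from the file header); the paper does not specify how a partial signal is detected.

```python
def acquire_signal(read_chunk, expected_len, max_attempts=3):
    """Blocks 1-3 (hypothetical sketch): request input until it is complete.

    `read_chunk` returns a list of samples or None. Completeness is assumed
    to mean exactly `expected_len` samples.
    """
    for attempt in range(1, max_attempts + 1):
        samples = read_chunk()
        if samples and len(samples) == expected_len:
            return samples  # valid input: continue with block 4
        print(f"Attempt {attempt}: input is empty or incomplete, please resubmit")  # block 3
    raise RuntimeError("aborted: no valid input received")


feed = iter([None, [0.1, 0.2], [0.1, 0.2, 0.3]])
print(acquire_signal(lambda: next(feed), expected_len=3))
```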

The tone envelope generation block forms harmonics corresponding to the frequency band of the piano, the instrument with the widest harmonic range among those considered (table 1).
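Assuming that the harmonics of this block are the equal-tempered fundamentals of the 88 piano keys (the paper does not give the exact construction), the frequency grid can be generated directly. The range A0 to C8 spans 27.5 Hz to about 4186 Hz, which agrees with the piano row of table 1.

```python
def piano_tone_grid(low_midi=21, high_midi=108, a4_hz=440.0):
    """Equal-tempered fundamentals (Hz) from A0 (MIDI 21) to C8 (MIDI 108)."""
    return [a4_hz * 2 ** ((m - 69) / 12) for m in range(low_midi, high_midi + 1)]


grid = piano_tone_grid()
print(len(grid), round(grid[0], 2), round(grid[-1], 2))  # 88 27.5 4186.01
```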

Block 5 performs timbre decomposition of the signal using the discrete wavelet transform, with the harmonics defined in the previous block used as basis functions. This makes it possible to localize the timbre components of various musical instruments.
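The paper does not specify the wavelet used in block 5. As a stand-in, the sketch below projects the signal onto Morlet-like atoms (Gaussian-windowed complex sinusoids) centred on candidate note frequencies. With a fixed number of cycles per atom, the window shrinks as frequency rises, which gives the wavelet-style scaling of fig. 1b. The function name `atom_response` and the default of 8 cycles are assumptions.

```python
import cmath
import math


def atom_response(x, fs, freq, center, n_cycles=8.0):
    """Normalized magnitude of the inner product of x with a Morlet-like atom.

    The Gaussian envelope spans about n_cycles periods of `freq`, so the atom
    gets shorter as frequency rises (constant-Q, wavelet-like scaling).
    """
    sigma = n_cycles / (2 * math.pi * freq)  # envelope standard deviation, seconds
    half = int(3 * sigma * fs)  # truncate the envelope at +/- 3 sigma
    acc, norm = 0j, 0.0
    for n in range(max(0, center - half), min(len(x), center + half + 1)):
        t = (n - center) / fs
        g = math.exp(-0.5 * (t / sigma) ** 2)
        acc += x[n] * g * cmath.exp(-2j * math.pi * freq * t)
        norm += g
    return abs(acc) / norm if norm else 0.0


fs = 8000
x = [math.sin(2 * math.pi * 440.0 * n / fs) for n in range(fs)]  # 1 s of A4
for f in (392.0, 440.0, 493.88):  # G4, A4, B4
    print(f, round(atom_response(x, fs, f, center=fs // 2), 3))
```

For a pure 440 Hz tone the atom at 440 Hz responds with about 0.5 (half the sinusoid's amplitude, because the analysing atom is complex), while the neighbouring whole tones G4 and B4 respond more weakly.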

The instrument identification block determines the onset and offset times of the minimal harmonic components, which form the T-set (fig. 3).
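The paper does not describe how the instrument identification block finds onset and offset times. A common baseline, swapped in here purely for illustration, is to threshold the RMS energy of consecutive frames; `frame_len` and `threshold` are hypothetical parameters.

```python
import math


def onsets_offsets(x, frame_len, threshold):
    """Return (onset, offset) sample pairs for runs of frames whose RMS >= threshold."""
    segments = []
    start = None
    for i in range(0, len(x) - frame_len + 1, frame_len):
        frame = x[i:i + frame_len]
        rms = math.sqrt(sum(v * v for v in frame) / frame_len)
        if rms >= threshold and start is None:
            start = i  # onset: first loud frame
        elif rms < threshold and start is not None:
            segments.append((start, i))  # offset: first quiet frame after a loud run
            start = None
    if start is not None:  # signal ends while still sounding
        segments.append((start, (len(x) // frame_len) * frame_len))
    return segments


x = [0.0] * 100 + [1.0] * 200 + [0.0] * 100 + [0.5] * 100
print(onsets_offsets(x, frame_len=50, threshold=0.1))  # [(100, 300), (400, 500)]
```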

Figure 2 – Algorithm of the proposed method

Figure 3 – T-set: $T_1, T_2, T_3, \ldots, T_{N_{ins}}$

Table 1 – Frequency ranges of some musical instruments

| Instrument | Low boundary frequency, Hz | High boundary frequency, Hz |
|---|---|---|
| Piano (concert piano) | 27 | 4200 |
| Contrabass | 40 | 300 |
| Violins | 210 | 2800 |
| Oboe | 230 | 1480 |
| Flute | 240 | 2300 |

If the T-set cannot be defined, a corresponding message is shown (block 8). Otherwise, the process goes to block 9.

The time-frequency decomposition block performs a discrete wavelet transform for each elementary T-image, using the elements of the T-set as basis functions. Scaling is performed according to a recursive principle based on the relations of the harmonic distribution in musical instruments [7]. The result is the W-set, which contains the source audio signal decomposed into timbre components.
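The recursive scaling rule is only outlined in the text. One possible reading, stated here as an assumption, is that each new basis template is obtained from the previous one by the equal-tempered semitone ratio $2^{1/12}$, so that twelve recursive steps double every partial frequency (one octave). The template format and function name are hypothetical.

```python
SEMITONE = 2 ** (1 / 12)  # equal-tempered ratio between adjacent notes (assumption)


def scaled_templates(template_partials_hz, n_steps):
    """Build templates recursively: template[j + 1] = template[j] * 2**(1/12).

    `template_partials_hz` is a hypothetical elementary T-image reduced to its
    partial frequencies; each step transposes it up by one semitone.
    """
    templates = [list(template_partials_hz)]
    for _ in range(n_steps):
        templates.append([f * SEMITONE for f in templates[-1]])
    return templates


t = scaled_templates([220.0, 440.0, 660.0], n_steps=12)
print([round(f, 2) for f in t[12]])  # one octave up: [440.0, 880.0, 1320.0]
```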

Block 10 initializes the training parameters used to determine sound patterns within the W-set.

The information obtained from block 11 is synthesized and converted into MIDI (Musical Instrument Digital Interface) format.
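The final conversion can be sketched with the standard mapping from frequency to MIDI note number, $n = 69 + 12 \log_2(f / 440\ \text{Hz})$, and the raw three-byte Note On (status 0x9n) and Note Off (status 0x8n) channel messages defined by the MIDI specification. A complete Standard MIDI File would additionally need a header chunk and delta times, which are omitted here; the function names are illustrative.

```python
import math


def freq_to_midi(freq_hz):
    """Nearest MIDI note number for a frequency in Hz (A4 = 440 Hz = note 69)."""
    note = int(round(69 + 12 * math.log2(freq_hz / 440.0)))
    if not 0 <= note <= 127:
        raise ValueError(f"{freq_hz} Hz is outside the MIDI note range")
    return note


def note_messages(freq_hz, velocity=64, channel=0):
    """Raw three-byte Note On / Note Off channel messages for one detected note."""
    note = freq_to_midi(freq_hz)
    note_on = bytes([0x90 | channel, note, velocity])  # status 0x9n: Note On, channel n
    note_off = bytes([0x80 | channel, note, 0])  # status 0x8n: Note Off, release velocity 0
    return note_on, note_off


on, off = note_messages(261.63)  # a detected C4
print(on.hex(), off.hex())  # 903c40 803c00
```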

Results

The proposed approach is expected to give good results in spectral resolution and in cases of harmonic overlap, owing to the wavelet transform algorithms. Overlapping timbres can be separated by a collection of basis wavelet functions, and the musical meter can be estimated after multipitch analysis, once enough note information is available for each instrumental part of the piece.

Conclusions

A critical analysis of existing methods of automatic music transcription has been performed, and the fundamental problems and drawbacks of extracting sounding objects have been considered. Finally, a new approach has been introduced. It uses an adaptive scheme that learns the character of a specific music signal.

Its main advantage is that the conversion of sound information is based on timbre analysis of the specific music signal. Whereas in previous developments the timbre patterns were defined beforehand and applied to all audio signals regardless of their style and class, the proposed method forms a collection of timbre data during timbre decomposition of the signal. This approach should increase quality and decrease identification errors across different musical styles.

References:

1. Klapuri, A. Signal Processing Methods for Music Transcription / A. Klapuri, M. Davy. Springer, New York, 2006.

2. Ellis, D. Extracting Information from Music Audio / D. Ellis // LabROSA, Dept. of Electrical Engineering, Columbia University, NY, March 15, 2006.

3. Фадеев, А. С. Identification of musical objects based on the continuous wavelet transform [in Russian] / А. С. Фадеев // Dissertation. Tomsk Polytechnic University, 2008.

4. Martin, K. D. Toward automatic sound source recognition: Identifying musical instruments / K. D. Martin // Proc. of the 1998 NATO Advanced Study Institute on Computational Hearing, Il Ciocco, Italy, July 1998.

5. Лукин, А. Introduction to digital signal processing (mathematical foundations) [in Russian] / А. Лукин. Laboratory of Computer Graphics and Multimedia, Moscow State University, 2007.

6. Андреева, А. В. Development of pitch hearing in primary school pupils learning to play the violin [in Russian] / А. В. Андреева // Dissertation. Nizhny Novgorod, 2009.

7. Способин, И. В. Elementary Theory of Music [in Russian] / И. В. Способин. State Music Publishing House, Moscow, 1963.