M.Sc. Ospanov M.G.

A.Baitursynov Kostanay State University, Kostanay

Image recognition model.

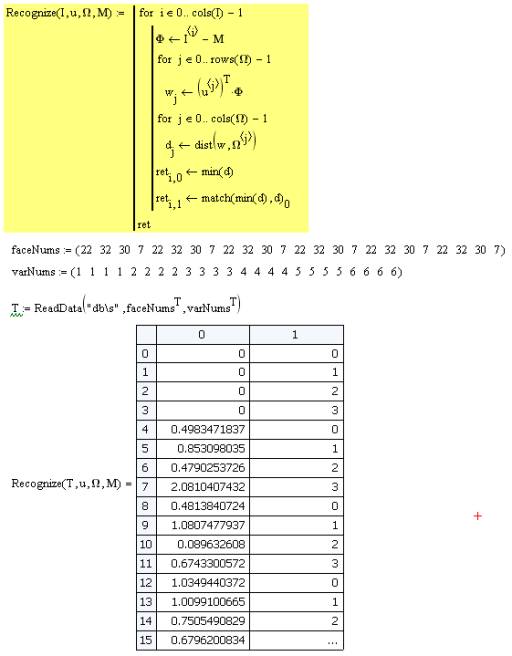

The model was built in the article "Image recognition. Creating a model". Here we test it.

We create a new sample of 24 images. The first four elements are the same as in the training set. The remaining images differ from those in the training set.

Next we load the data and pass it to the procedure Recognize. In it, each image is mean-centered and projected onto the space of principal components, which gives the weight vector w. Once w is known, we need to determine which of the existing objects it is closest to. To do this, we use the function dist (in pattern recognition problems it is better to replace the classical Euclidean distance with another metric, the Mahalanobis distance). The result is the minimum distance and the index of the object to which the image is closest.
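The recognition step described above can be sketched roughly as follows. This is a minimal illustration in plain Python, not the author's Recognize procedure: the function names, the argument layout, and the toy data are all assumptions, and the Mahalanobis variant assumes that the eigenvalues of the principal components are available from the training step.

```python
import math

def project(image, mean_image, components):
    """Mean-center a flattened image and project it onto each principal component."""
    centered = [p - m for p, m in zip(image, mean_image)]
    return [sum(c * x for c, x in zip(comp, centered)) for comp in components]

def euclidean(u, v):
    """Classical Euclidean distance between two weight vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def mahalanobis(u, v, eigenvalues):
    """Mahalanobis distance in PCA space, where the covariance matrix is
    diagonal and holds the eigenvalues (assumed known from training)."""
    return math.sqrt(sum((a - b) ** 2 / lam
                         for a, b, lam in zip(u, v, eigenvalues)))

def recognize(image, mean_image, components, known_weights, dist=euclidean):
    """Return (minimum distance, index of the closest known object)."""
    w = project(image, mean_image, components)
    distances = [dist(w, k) for k in known_weights]
    index = min(range(len(distances)), key=distances.__getitem__)
    return distances[index], index

# Toy data (hypothetical): 4-pixel "images" and two orthonormal components.
mean_image = [1.0, 1.0, 1.0, 1.0]
components = [[0.5, 0.5, 0.5, 0.5], [0.5, -0.5, 0.5, -0.5]]
known_weights = [[0.0, 0.0], [2.0, 0.0], [0.0, 2.0]]

min_distance, index = recognize([2.0, 2.0, 2.0, 2.0],
                                mean_image, components, known_weights)
```

To switch metrics in this sketch, pass something like `dist=lambda u, v: mahalanobis(u, v, eigenvalues)` to `recognize`.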

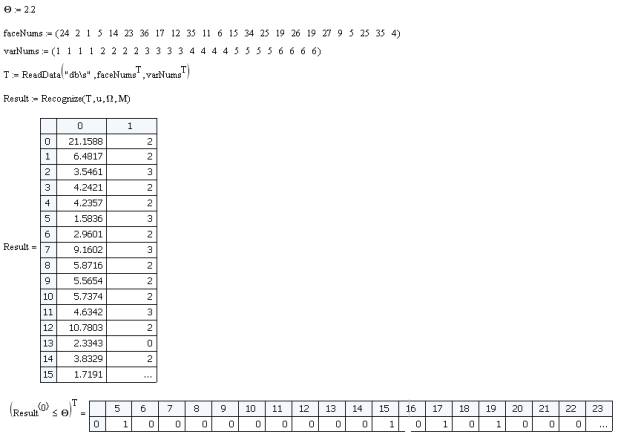

On the sample of 24 objects shown above, the classifier achieved 100% efficiency. But there is one nuance. If we feed in an image that is not in the source database, the vector w will still be computed and a minimum distance will still be found. Therefore we introduce a criterion O: if the minimum distance < O, the image belongs to a recognizable class; if the minimum distance > O, there is no such image in the database. The value of this criterion is chosen empirically. For this model I chose O = 2.2.
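The criterion can be expressed as a small check on the minimum distance. The sketch below is only an illustration: the function name is hypothetical, and treating a distance exactly equal to O as "not in the database" is an assumption, since the text only covers the strict cases.

```python
def classify_with_threshold(min_distance, index, threshold=2.2):
    """Return the index of the matched object, or None when the nearest
    match is too far away to count as a known image.

    threshold plays the role of the criterion O (2.2 for this model).
    Assumption: a distance exactly equal to the threshold is rejected.
    """
    if min_distance < threshold:
        return index
    return None

accepted = classify_with_threshold(1.5, 3)   # close enough: index is kept
rejected = classify_with_threshold(3.0, 3)   # too far: treated as unknown
```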

Let's make a sample of faces that are not in the training set and see how well the classifier rejects false samples.

Of the 24 samples, four were false positives, i.e. the efficiency was 83%.
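The 83% figure follows from the share of unknown samples that were correctly rejected, (24 - 4) / 24. A tiny sketch of that arithmetic, with a hypothetical helper name:

```python
def rejection_efficiency(total, false_positives):
    """Share of unknown samples that the classifier correctly rejected."""
    return (total - false_positives) / total

efficiency = rejection_efficiency(24, 4)
print(f"{efficiency:.1%}")  # prints 83.3%
```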

Conclusion

In general, this is a simple and original algorithm. It proves once again that spaces of higher dimension "hide" a lot of useful information that can be used in different ways. Combined with other advanced techniques, eigenfaces can be applied to improve the effectiveness of solving such problems.

For example, we used a simple distance classifier. However, we could use a better classification algorithm, such as a Support Vector Machine (SVM) or a neural network.

References:

1. onionesquereality.wordpress.com/