Ph.D. Kryuchin O.V., Kryuchina E.I.

Tambov State University named after G.R. Derzhavin

Adaptive resonance theory and its implementation using artificial neural networks

The human brain performs the difficult task of analyzing the continuous stream of sensory information that it receives from the environment. It distinguishes important data from the stream of trivial information and adapts to the former, usually storing the important information in long-term memory. Understanding how human memory works is very difficult because new patterns are memorized while old patterns are neither forgotten nor modified.

Many scientists have tried to analyze how the human brain works using artificial neural networks (ANNs), but traditional ANNs could not solve the problem of combining stability with plasticity. Very often, adding new patterns destroys or changes the results of previous training. Sometimes this is not important, for example when the set of training vectors is fixed, because in this case the vectors can be presented cyclically during training. In back-propagation networks the training vectors are presented to the input layer one after another until the network has learned the whole set. But if a fully trained network then has to memorize a new training vector, its weights may change so much that the ANN must be trained again [1, 2].

In a real situation the network is exposed to varied stimuli and may never see the same training vector twice. As a result the network often fails to learn: it keeps changing its weights but never achieves good results. There are also networks that cannot be trained when four training vectors are presented cyclically, because these vectors force the weights to change continually [1].

This problem was one of the reasons for the development of adaptive resonance theory (ART) in 1969. ART is a theory developed by Stephen Grossberg and Gail Carpenter on aspects of how the brain processes information. It describes a number of neural network models which use supervised and unsupervised learning methods and address problems such as pattern recognition and prediction [3]. One of the main problems solved by ART structures is classification.

The primary intuition behind the ART model is that object identification and recognition generally occur as a result of the interaction of “top-down” observer expectations with “bottom-up” sensory information. The model postulates that “top-down” expectations take the form of a memory template or prototype that is then compared with the actual features of an object as detected by the senses. This comparison gives rise to a measure of category belonging. As long as this difference between sensation and expectation does not exceed a set threshold called the “vigilance parameter”, the sensed object will be considered a member of the expected class [4].
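The comparison of a sensed object with a stored template can be illustrated with a minimal sketch. It assumes binary feature vectors and the ART1-style convention in which the match is expressed as a similarity that must reach the vigilance value, rather than as a difference that must stay below it. The function names `match_ratio` and `is_member` are hypothetical and do not come from the cited works.

```python
def match_ratio(sensed, template):
    """Share of the active input features that the template also expects."""
    active = sum(sensed)
    if active == 0:
        return 1.0  # assumption: an empty input matches any template
    overlap = sum(s and t for s, t in zip(sensed, template))
    return overlap / active


def is_member(sensed, template, vigilance):
    """True when the match is close enough for the expected class."""
    return match_ratio(sensed, template) >= vigilance


sensed = [1, 1, 0, 1, 0]    # "bottom-up" features detected by the senses
template = [1, 1, 0, 0, 0]  # "top-down" expectation (memory prototype)

print(match_ratio(sensed, template))     # 2 of the 3 active features agree
print(is_member(sensed, template, 0.6))  # True
print(is_member(sensed, template, 0.8))  # False
```

With the same object and the same template, raising the vigilance from 0.6 to 0.8 turns acceptance into rejection, which is the role the text assigns to the vigilance parameter.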

The basic ART system is an unsupervised learning model. It typically consists of a comparison field and a recognition field composed of neurons, a vigilance parameter, and a reset module. The vigilance parameter has considerable influence on the system: higher vigilance produces highly detailed memories (many fine-grained categories), while lower vigilance results in more general memories (fewer, more general categories). The comparison field takes an input vector (a one-dimensional array of values) and transfers it to its best match in the recognition field. Its best match is the single neuron whose set of weights (weight vector) most closely matches the input vector. Each recognition field neuron outputs a negative signal (proportional to that neuron's quality of match to the input vector) to each of the other recognition field neurons and inhibits their output accordingly. In this way the recognition field exhibits lateral inhibition, allowing each neuron in it to represent a category to which input vectors are classified. After the input vector is classified, the reset module compares the strength of the recognition match to the vigilance parameter. If the vigilance threshold is met, training commences. Otherwise, if the match level does not meet the vigilance parameter, the firing recognition neuron is inhibited until a new input vector is applied; training commences only upon completion of a search procedure. In the search procedure, recognition neurons are disabled one by one by the reset function until the vigilance parameter is satisfied by a recognition match. If no committed neuron's recognition match meets the vigilance threshold, then an uncommitted neuron is committed and adjusted towards matching the input vector (see also [5, 6]).
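The recognition, reset and search steps described above can be sketched as a short program. This is a simplified illustration under stated assumptions, not the implementation from the cited works: inputs are binary vectors, learning is "fast" (the winning template is replaced by its element-wise AND with the input), and lateral inhibition is modelled simply by ranking the neurons and trying the strongest first. The names `overlap` and `art1_cluster` and the tie-breaking parameter `beta` are hypothetical.

```python
def overlap(x, w):
    """Number of features active in both binary vectors."""
    return sum(a & b for a, b in zip(x, w))


def art1_cluster(inputs, vigilance, beta=1.0):
    """Assign each binary input to a category; return (labels, prototypes)."""
    prototypes = []  # one weight vector per committed recognition neuron
    labels = []
    for x in inputs:
        # Recognition field: rank committed neurons by quality of match.
        order = sorted(
            range(len(prototypes)),
            key=lambda j: overlap(x, prototypes[j]) / (beta + sum(prototypes[j])),
            reverse=True,
        )
        winner = None
        for j in order:
            # Reset module: a neuron that fails the vigilance test is
            # disabled for this input and the search moves to the next one.
            if overlap(x, prototypes[j]) >= vigilance * sum(x):
                winner = j
                break
        if winner is None:
            # No committed neuron is good enough: commit a new one.
            prototypes.append(list(x))
            winner = len(prototypes) - 1
        else:
            # Training: move the winning template towards the input.
            prototypes[winner] = [a & b for a, b in zip(x, prototypes[winner])]
        labels.append(winner)
    return labels, prototypes


patterns = [
    [1, 1, 0, 0, 0, 0],
    [1, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

print(art1_cluster(patterns, vigilance=0.6)[0])  # [0, 0, 1, 1]
print(art1_cluster(patterns, vigilance=0.9)[0])  # [0, 1, 2, 3]
```

On this toy data the lower vigilance yields two general categories, while the higher vigilance commits a separate neuron for every pattern, in line with the behaviour described in the paragraph.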

To date, only a few types of ART networks have been developed. These networks self-organize stable recognition categories in response to arbitrary sequences of analog as well as binary input patterns. Computer simulations are used to illustrate the dynamics of the system [7].

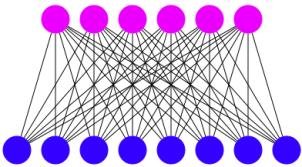

ART networks consist of two layers: the input (comparison) layer, which has L neurons, and the output (recognition) layer, which has P neurons. Each neuron of the input layer is connected to each neuron of the output layer by ascending synaptic links, and each neuron of the output layer is connected to each neuron of the input layer by descending links [8]. Figure 1 shows an ART structure in which the input layer (blue) has 8 neurons and the output layer (magenta) has 6 neurons.

Figure 1: ART structure.
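The two-layer structure of Figure 1 can be written down as a pair of weight tables, one for the ascending links and one for the descending links. The sketch below is only an illustration: the names `bottom_up` and `top_down`, the initial weight values, and the helper functions are assumptions that do not appear in the source.

```python
L, P = 8, 6  # layer sizes taken from Figure 1

# Ascending links: one weight for every (input neuron, output neuron) pair.
# The initial value 1 / (1 + L) is an assumed, commonly used starting point.
bottom_up = [[1.0 / (1 + L)] * P for _ in range(L)]  # L rows, P columns

# Descending links: one weight for every (output neuron, input neuron) pair.
# Starting with all ones is likewise an assumption.
top_down = [[1] * L for _ in range(P)]  # P rows, L columns


def recognition_activations(x):
    """Weighted sums that reach each of the P output neurons."""
    return [sum(x[i] * bottom_up[i][j] for i in range(L)) for j in range(P)]


def expectation(j):
    """Template that output neuron j sends back to the input layer."""
    return top_down[j]


x = [1, 0, 1, 1, 0, 0, 1, 0]  # example input with one value per input neuron
activations = recognition_activations(x)
print(len(activations), len(expectation(0)))  # 6 8
```

Each direction holds L x P = 48 links, so every input neuron reaches every output neuron and vice versa, as stated in the text.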

Literature

1. Круглов В.В. Искусственные нейронные сети // Москва. 2001. (Kruglov V.V. Artificial neural networks // Moscow. 2001.)

2. Уоссермен Ф. Нейрокомпьютерная техника // Москва. Мир, 1992. (Wasserman F. Neurocomputer techniques // Moscow. Mir. 1992.)

3. Grossberg S. Adaptive Pattern Classification and Universal Recoding, II: Feedback, Expectation, Olfaction, and Illusions // Biol. Cybern. 23, 187 (1976).

4. O'Meadhra C.E., Kenny A. Sensory Modal Switching // Discussion Paper, Multisensory Design Research Group at the National College of Art and Design, 2011.

5. Carpenter G.A., Grossberg S. Category learning and adaptive pattern recognition: a neural network model // Proceedings of the Third Army Conference on Applied Mathematics and Computing, ARO Report 86-1 (1985). P. 37-56.

6. Carpenter G.A., Grossberg S. Invariant pattern recognition and recall by an attentive self-organizing ART architecture in a nonstationary world // Proceedings of the First International Conference on Neural Networks, San Diego (IEEE, New York, 1987).

7. Carpenter G.A., Grossberg S. ART 2: Self-organization of stable category recognition codes for analog input patterns // Applied Optics, 26(23), 1987. P. 4919-4930.

8. Grossberg S. Competitive learning: from interactive activation to adaptive resonance // Cognitive Sci. 11, 23 (1987).